Implementation of new trading system

Business Case #1

A commercial bank had become a member of a Danish banking Data Processing Center (DPC). For various reasons, the bank did not want to use the DPC’s existing stock exchange trading solution for domestic fixed-income instruments and equities. Instead, the bank preferred a foreign supplier offering a considerably more advanced trading system, which could integrate with the Copenhagen Stock Exchange/Nasdaq/OMX as well as with a large number of international stock exchanges.

Objective

The objective, therefore, was to integrate the new trading system with the DPC’s existing back-end accounting and settlement system, so that domestic stock exchange trades could be executed and settled both from the bank’s branches and from its internet bank.

System APIs

Both systems exposed an API through which trades could be managed. The back-end accounting and settlement system was based on a limited number (~30) of message/transaction types in an IBM WebSphere MQ architecture, whereas the trading system supported more than 200 different message types, exchanged over TCP sockets using a name/value structure (e.g. “ISIN=DK0009922320”).
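To make the name/value structure concrete, here is a minimal Python sketch that splits one trading-system message into a dictionary. The `|` field separator and the `MSGTYPE` and `QTY` field names are assumptions for illustration; the article does not describe the actual wire layout.

```python
def parse_name_value(raw: str, sep: str = "|") -> dict[str, str]:
    """Split a name/value message such as 'MSGTYPE=TRADE|ISIN=DK0009922320'
    into a dict. The separator and field names are hypothetical."""
    fields: dict[str, str] = {}
    for pair in raw.split(sep):
        if not pair.strip():
            continue  # ignore empty segments, e.g. a trailing separator
        name, _, value = pair.partition("=")
        # tolerate stray whitespace around names and values
        fields[name.strip()] = value.strip()
    return fields


msg = parse_name_value("MSGTYPE=TRADE|ISIN=DK0009922320|QTY=100|")
print(msg)  # {'MSGTYPE': 'TRADE', 'ISIN': 'DK0009922320', 'QTY': '100'}
```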

Message Content and Types

Since the message/transaction types of the two systems had different semantic content, their underlying technical implementations were also markedly different. Both systems, however, had to support synchronous transactions (e.g. a trade being sent) as well as asynchronous transactions (e.g. a trade being received).
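One common way to support both styles in a single adapter is to match replies to requests by a correlation id: a synchronous call blocks until its reply arrives, and any message that matches no pending request is treated as asynchronous. The Python sketch below assumes such a correlation id exists in both protocols; the `Adapter` class and the `fake_send` transport are hypothetical.

```python
import itertools
import threading


class Adapter:
    """Hypothetical sketch: a synchronous send blocks until the reply with the
    matching correlation id arrives; anything unmatched is treated as an
    asynchronous (unsolicited) message, e.g. a trade received."""

    def __init__(self, on_async):
        self._ids = itertools.count(1)
        self._pending = {}              # correlation id -> (event, reply slot)
        self._lock = threading.Lock()
        self._on_async = on_async

    def send_sync(self, send, payload, timeout=5.0):
        corr_id = next(self._ids)
        done, slot = threading.Event(), {}
        with self._lock:
            self._pending[corr_id] = (done, slot)
        send(corr_id, payload)          # transport (socket/MQ) abstracted away
        if not done.wait(timeout):
            with self._lock:
                self._pending.pop(corr_id, None)
            raise TimeoutError(f"no reply for request {corr_id}")
        return slot["reply"]

    def on_incoming(self, corr_id, message):
        with self._lock:
            entry = self._pending.pop(corr_id, None)
        if entry is None:
            self._on_async(message)     # unsolicited: hand to async handler
        else:
            done, slot = entry
            slot["reply"] = message
            done.set()                  # wake the waiting synchronous caller


received = []
adapter = Adapter(on_async=received.append)


def fake_send(corr_id, payload):
    # simulate the remote side acknowledging on another thread
    threading.Thread(target=adapter.on_incoming,
                     args=(corr_id, {"ack": payload["ISIN"]})).start()


reply = adapter.send_sync(fake_send, {"ISIN": "DK0009922320"})
adapter.on_incoming(None, {"event": "TRADE_EXECUTED"})
print(reply, received)
```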

Reconciliation and Auditing

For both business and auditing reasons, reconciliation and monitoring functions had to be established in order to reconcile transactions and identify problematic ones (e.g. to verify that a trade order initiated in the internet bank was in fact executed in the trading system and subsequently settled and booked on the customer’s account).
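The reconciliation check in the example above can be sketched as a comparison of the order ids seen at each stage. This Python version assumes a single order id is shared across the internet bank, the trading system and the settlement system, which the article does not state; the `Gap` record and its problem texts are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gap:
    order_id: str
    problem: str


def reconcile(orders: set[str], executions: set[str], bookings: set[str]) -> list[Gap]:
    """Hypothetical sketch: compare order ids seen at each stage and report
    where a trade stopped (assumes one shared order id across all systems)."""
    gaps = []
    for order_id in sorted(orders):
        if order_id not in executions:
            gaps.append(Gap(order_id, "not executed in trading system"))
        elif order_id not in bookings:
            gaps.append(Gap(order_id, "executed but not settled/booked"))
    # executions without an originating order are also worth flagging
    for order_id in sorted(executions - orders):
        gaps.append(Gap(order_id, "execution without known order"))
    return gaps


result = reconcile({"A1", "A2", "A3"}, {"A1", "A2", "X9"}, {"A1"})
for gap in result:
    print(gap)
```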

Development and Test Environments

To facilitate the development project, the foreign supplier provided a UNIX-based development server, while the DPC established a test environment in which settlement and accounting transactions could be tested.

Neither the DPC, its bank customer, nor the foreign supplier imposed any requirements on the execution platform or system environment, except that a solution implemented in a Microsoft environment would be preferred.

System description

At a high level, the objective was to develop and implement a two-way module, or adapter, able to communicate in real time between the two separate systems without changing either of the existing systems.

This adapter could be developed in a number of ways, but a key requirement was to establish a common data format that both systems’ messages could be translated to and from. Since the adapter was to run on a single machine/server, this intermediate format did not need to be portable across platforms, so a binary format was a natural choice.
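Read as an in-memory, typed representation (the article later describes it as a class representing a message in binary format), the intermediate format might look like the Python sketch below. The `Trade` fields and validation rules are assumptions; using `Decimal` for prices is a deliberate choice to avoid binary floating-point rounding on monetary values.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Trade:
    """Hypothetical canonical trade that both systems convert to and from."""
    isin: str
    side: str          # "BUY" or "SELL"
    quantity: int
    price: Decimal     # Decimal avoids binary floating-point rounding

    def __post_init__(self):
        # reject malformed values once, at the boundary
        if len(self.isin) != 12:
            raise ValueError(f"ISIN must be 12 characters: {self.isin!r}")
        if self.side not in ("BUY", "SELL"):
            raise ValueError(f"unknown side: {self.side!r}")


trade = Trade("DK0009922320", "BUY", 100, Decimal("512.50"))
print(trade.quantity * trade.price)  # 51250.00
```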

Message Types

Because of the differences between the two systems’ message types and contents, it was necessary to identify the smallest set of messages required to support the functions in both the bank branches and the internet bank.

Once the message types (and the mappings from one system to the other) had been identified, the next step was to design one class per system per message type, each with a method able to translate from one system to the other via the intermediate binary format.
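The one-class-per-system-per-message-type design can be sketched as follows for a single trade message. The trading-system field names (`ISIN`, `QTY`, `PRICE`) and the semicolon-delimited settlement record are hypothetical stand-ins for the real formats; the point is that every translation passes through the intermediate `Trade`.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Trade:
    """Hypothetical intermediate (binary) representation of a trade."""
    isin: str
    quantity: int
    price: Decimal


class TradingSystemTrade:
    """Trading-system side: name/value fields <-> Trade (field names assumed)."""

    @staticmethod
    def to_trade(fields: dict[str, str]) -> Trade:
        return Trade(fields["ISIN"], int(fields["QTY"]), Decimal(fields["PRICE"]))

    @staticmethod
    def from_trade(t: Trade) -> dict[str, str]:
        return {"ISIN": t.isin, "QTY": str(t.quantity), "PRICE": str(t.price)}


class SettlementTrade:
    """Settlement side: a semicolon-delimited record (layout is hypothetical)."""

    @staticmethod
    def to_trade(record: str) -> Trade:
        isin, qty, price = record.split(";")
        return Trade(isin, int(qty), Decimal(price))

    @staticmethod
    def from_trade(t: Trade) -> str:
        return f"{t.isin};{t.quantity};{t.price}"


def trading_to_settlement(fields: dict[str, str]) -> str:
    # every translation goes through the intermediate Trade
    return SettlementTrade.from_trade(TradingSystemTrade.to_trade(fields))


print(trading_to_settlement({"ISIN": "DK0009922320", "QTY": "100", "PRICE": "512.50"}))
# DK0009922320;100;512.50
```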

Message Implementation

Since each message/transaction from the two systems had to be converted into its equivalent binary class, the messages (in text format) had to be parsed into their corresponding classes. Because of the large number of message types (200+), hand-writing a 1:1 translator for each one was quickly discarded in favor of a “parser generator” able to generate executable code for parsing the text-based message data.

Message Parsing

The open-source parser generator ANTLR was selected for this purpose.

ANTLR takes a relatively short syntax description (a grammar) as input and generates source code, in this case C#, that performs the actual parsing, resulting in a C# class that represents a given message in binary format. In the ANTLR syntax description, you specify which action to perform when a given symbol or token occurs in the input text (e.g. that a trade object is to be created, that the object is assigned an ISIN property with the appropriate value, etc.).
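The following Python sketch does not use ANTLR; it only mimics the idea of grammar actions with a hand-written tokenizer and an action table, so that recognizing a token triggers an action on the object being built. The `NAME=VALUE|...` layout, the `TradeMsg` class and the `ACTIONS` table are hypothetical.

```python
import re
from dataclasses import dataclass, field

# NAME=VALUE tokens separated by '|' (hypothetical wire layout)
TOKEN = re.compile(r"([A-Z]+)=([^|]*)")


@dataclass
class TradeMsg:
    isin: str = ""
    quantity: int = 0
    extras: dict = field(default_factory=dict)


# Action table: what to do when a given field token is recognized,
# analogous to the actions embedded in a grammar rule.
ACTIONS = {
    "ISIN": lambda m, v: setattr(m, "isin", v),
    "QTY": lambda m, v: setattr(m, "quantity", int(v)),
}


def parse(text: str) -> TradeMsg:
    msg = TradeMsg()  # "a trade object is to be created"
    for name, value in TOKEN.findall(text):
        action = ACTIONS.get(name)
        if action:
            action(msg, value)
        else:
            msg.extras[name] = value  # unknown fields are kept, not dropped
    return msg


print(parse("ISIN=DK0009922320|QTY=100|VENUE=XCSE"))
```

A generator such as ANTLR produces the tokenizer and dispatch logic from the grammar instead, which is what made it attractive for 200+ message types.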

Architecture

By collecting and structuring the data fields used across both systems in one place, the total amount of parsing code could be substantially reduced compared to a design in which each class was responsible for its own conversion between binary and text formats.
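A shared field catalogue of this kind can be sketched as two tables plus one generic conversion function, so that adding a message type means adding a table entry rather than a new converter. All field names, settlement-side names and message types below are hypothetical.

```python
from decimal import Decimal

# Hypothetical shared field catalogue: one entry per business field
# (trading-system name, settlement-system name, type converter), reused by
# every message type instead of being re-declared in each message class.
FIELDS = {
    "isin":     ("ISIN",  "SecId",  str),
    "quantity": ("QTY",   "Amount", int),
    "price":    ("PRICE", "Rate",   Decimal),
}

# Message types only list which shared fields they carry (assumed names).
MESSAGE_TYPES = {
    "TRADE": ["isin", "quantity", "price"],
    "CANCEL": ["isin"],
}


def convert_message(msg_type: str, fields: dict[str, str]) -> dict:
    """Generic trading-to-settlement conversion driven by the tables above;
    no per-message-type conversion code is needed."""
    out = {}
    for key in MESSAGE_TYPES[msg_type]:
        trading_name, settlement_name, convert = FIELDS[key]
        out[settlement_name] = convert(fields[trading_name])
    return out


print(convert_message("TRADE", {"ISIN": "DK0009922320", "QTY": "100", "PRICE": "512.50"}))
```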

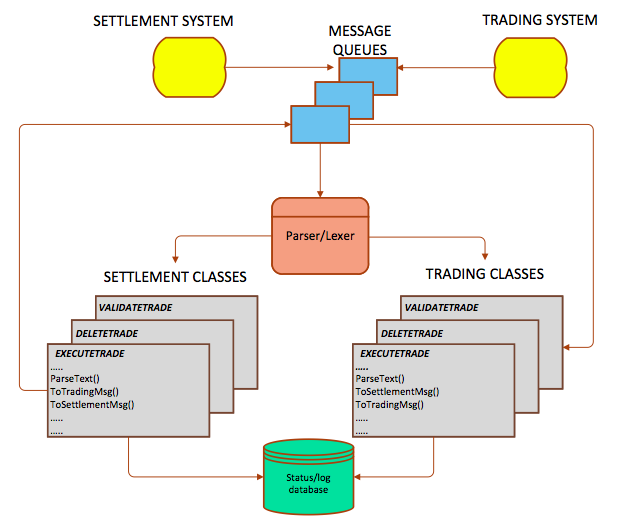

The overall architecture is depicted in the following diagram:

Queues

A number of .NET queues were established as temporary message stores, both because the system’s sub-components have different processing durations and because this architecture is better suited to supporting higher transaction volumes.
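Decoupling stages with queues can be sketched in Python with `queue.Queue`: each stage reads from an inbox and writes to an outbox, so a slow stage only makes its inbox grow instead of blocking the stage before it. The stage functions, delays and the `None` shutdown sentinel are assumptions for illustration; the queue names loosely follow the labels used in the message-flow figure.

```python
import queue
import threading
import time


def stage(inbox: queue.Queue, outbox: queue.Queue, work, delay: float) -> None:
    """Hypothetical pipeline stage: take from inbox, process, put to outbox.
    The queue absorbs bursts when this stage is slower than its neighbours."""
    while True:
        item = inbox.get()
        if item is None:          # sentinel: shut down and pass it on
            outbox.put(None)
            return
        time.sleep(delay)         # stand-in for variable processing time
        outbox.put(work(item))


text_q, trade_q, done_q = queue.Queue(), queue.Queue(), queue.Queue()
threading.Thread(target=stage, args=(text_q, trade_q, str.upper, 0.001)).start()
threading.Thread(target=stage, args=(trade_q, done_q, lambda s: s + "!", 0.005)).start()

for msg in ["a", "b", "c"]:
    text_q.put(msg)               # the producer never waits for slow stages
text_q.put(None)

results = []
while (item := done_q.get()) is not None:
    results.append(item)
print(results)  # ['A!', 'B!', 'C!']
```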

Thread-Pools

Similarly, five separate .NET thread pools were created, from which individual processing threads could be acquired as needed. Like the message queues, the thread pools markedly improve overall system performance, but they are more complex to implement because of the extensive synchronization they require.
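In Python, the same idea can be sketched with `concurrent.futures.ThreadPoolExecutor`, one pool per stage with a configurable size. The pool names and sizes are hypothetical; the lock around the shared counter illustrates the kind of synchronization that makes thread pools harder to get right.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

# Hypothetical pool names and sizes; the article mentions five pools whose
# thread counts could be tuned through configuration.
POOL_SIZES = {"parse": 4, "convert": 4, "send": 2, "log": 1, "reconcile": 1}
pools = {name: ThreadPoolExecutor(max_workers=n, thread_name_prefix=name)
         for name, n in POOL_SIZES.items()}

processed = 0
counter_lock = threading.Lock()   # shared state must be synchronized


def handle(msg: str) -> str:
    global processed
    with counter_lock:            # without the lock, increments could be lost
        processed += 1
    return msg.upper()


futures = [pools["parse"].submit(handle, f"msg{i}") for i in range(100)]
results = [f.result() for f in futures]
for pool in pools.values():
    pool.shutdown(wait=True)
print(processed, results[0])  # 100 MSG0
```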

Message Flow

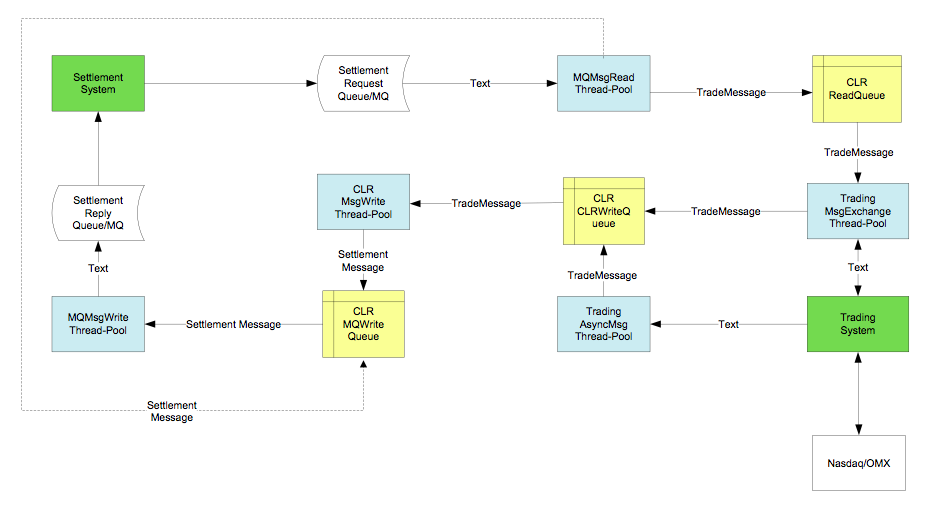

The entire message flow is illustrated in the following figure:

“Text” denotes messages in their original text format, “TradeMessage” denotes messages in the trading system format, and “SettlementMessage” denotes messages in the settlement system format.

The yellow and white boxes represent the message queues described above, while the light-blue processes represent thread pools, i.e. the actual processing logic that converts messages between the two formats, transfers messages between queues, logs and reconciles messages, etc.
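The flow can also be described as data: a table of (source queue, processing step, target queue) entries, with every hop recorded for later logging and reconciliation. This Python sketch covers only the trading-to-settlement direction, and its conversion steps are placeholders that merely relabel the message format.

```python
from typing import Callable

# Hypothetical flow table for one direction (trading -> settlement); each
# step reads from one queue label and writes to the next, as in the figure.
FLOW: list[tuple[str, Callable[[dict], dict], str]] = [
    ("Text", lambda m: {**m, "format": "TradeMessage"}, "TradeMessage"),
    ("TradeMessage", lambda m: {**m, "format": "SettlementMessage"}, "SettlementMessage"),
]


def route(message: dict, start: str, audit: list) -> dict:
    """Apply each step whose source matches the current queue, recording every
    hop so it can later be logged and reconciled."""
    current = start
    for source, step, target in FLOW:
        if source == current:
            message = step(message)
            audit.append((message["id"], source, target))
            current = target
    return message


audit_log = []
out = route({"id": "T1", "format": "Text"}, "Text", audit_log)
print(out["format"], audit_log)
```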

Performance

Deployed on a single server, the system processed more than 100 transactions per second, which was more than adequate for a single bank. Because of the loosely coupled architecture, additional servers could be added by changing little more than configuration options. As such, the system was highly scalable and could be fine-tuned by adjusting the number of threads in each pool.
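As a rough starting point for sizing each pool, Little’s law (not mentioned in the article) says that the average number of busy threads equals throughput multiplied by processing time per message. The Python helper below applies it with an assumed 70% target utilization; the inputs are hypothetical, and real tuning would still rely on measurement.

```python
import math


def threads_needed(target_tps: float, seconds_per_message: float,
                   utilisation: float = 0.7) -> int:
    """Little's law estimate (L = throughput * time) of busy threads for one
    pool, padded so threads are only `utilisation` busy on average.
    All inputs are hypothetical."""
    busy = target_tps * seconds_per_message
    return max(1, math.ceil(busy / utilisation))


# e.g. 100 transactions/s at an assumed 20 ms per message in one stage
print(threads_needed(100, 0.02))  # 3
```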

Implementation

The system was developed in C# using MS Visual Studio and MS SQL Server, and was deployed on .NET under Windows. It was delivered as a Windows service with configurable opening hours and included extensive logging mechanisms supporting a number of different media (Windows event log, log files, DBMS, SNMP, etc.). To monitor the message flow, a Windows Forms application was developed using Developer Express’ GUI components. The entire code base comprised more than 40,000 lines of code and required six person-months of development effort.
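A configurable opening-hours check for such a service might look like the Python sketch below. The configuration keys, trading weekdays and times are assumptions; the article only states that the opening hours were configurable.

```python
from datetime import datetime, time

# Hypothetical opening-hours configuration (Monday=0 ... Friday=4).
OPENING_HOURS = {"weekdays": {0, 1, 2, 3, 4}, "open": time(8, 0), "close": time(17, 30)}


def is_open(now: datetime, cfg: dict = OPENING_HOURS) -> bool:
    """True if `now` falls on a configured weekday within [open, close)."""
    return now.weekday() in cfg["weekdays"] and cfg["open"] <= now.time() < cfg["close"]


print(is_open(datetime(2024, 3, 4, 9, 15)))  # True (a Monday)
print(is_open(datetime(2024, 3, 9, 9, 15)))  # False (a Saturday)
```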

Michael has more than 25 years of experience as a business and IT consultant, with primary focus on financial institutions.

Early in his career, Michael became involved in IT development and gradually moved into more technical IT implementation work. He has extensive IT experience and has worked with a number of different platforms and database systems.

Michael is part of CMP’s team of strong technical IT consultants who provide our customers with key technical expertise across a wide range of technical challenges.
