Amortization of loan fees

Business Case #2

A Danish banking Data Processing Center (DPC) had entered into a collaboration with a foreign supplier on development of Basel II functionality for the DPC’s banking customers. Since the supplier had extensive experience with – and software for – management of a wide range of financial instruments, the company was also selected to develop a system for the amortization of major loan fees in accordance with IFRS/IAS specifications.

In addition, the system had to produce more than 50,000 Excel spreadsheets to be used by banking customers for analyzing loan write-offs and depreciations. The calculations were to be performed on a monthly basis – typically during weekends because total processing duration exceeded a full day.

Objective

To this end, the supplier established a deployment system consisting of five Windows based calculation servers and an Oracle DBMS server. In addition, the DPC had developed data extraction software able to retrieve – from a Data Warehouse – the instrument data to be included in the IFRS/IAS calculations and the loan write-offs. The result of the monthly calculations (amortized monthly loan fee contribution for each loan) was subsequently to be stored in a text file returned to the host system for account processing.

Planned Solution

Because a large number of DPC member banks were involved (100+), the supplier planned to deploy the calculations in parallel on the five calculation servers all having access to the shared Oracle DBMS.

Since the supplier had planned to use existing components from its current software portfolio, the supplier decided to perform the calculations in a number of Windows batch jobs, where each job delivered input data to a calculation server and subsequently received the result for further processing. The implemented solution was later tested over a one-month pilot period.

Problems

Using this procedure, however, proved to be exceptionally challenging: frequently, the calculations were not able to complete within the allocated time slots, and error processing turned out to be almost impossible. Every processing step in the supplier’s standard modules produced a log file, and since the overall processing consisted of many steps, hundreds of log files were produced, none of which were in a standardized format.

To further complicate matters, erroneous input data (from the DPC’s Data Warehouse), together with periodic errors in the supplier’s modules, meant that the executing batch jobs frequently crashed and the entire execution terminated mid-process for a number of member banks. Finally, the existing software modules were not able to calculate amortized fees correctly.

Alternative Solution

Clearly, this situation was unacceptable. There were no realistic plans for the current system to be able to fulfill the original objective, and no immediate ways in which the problems could be resolved.

To overcome the current problems, it quickly became clear that radical changes were required. A number of options were considered, including the purchase of alternative software modules, restructuring of the current system, etc. Instead, it was decided to discard the current system altogether in favor of developing a new system from the ground up, but utilizing the existing Data Warehouse retrieval and host processing modules.

Requirements

Because a lot of the Data Warehouse data was inadequate (and frequently erroneous), a new system had to be able to generate cashflows for all instruments included from the Data Warehouse (a number of different loan types and guarantees). In addition, the system had to be able to calculate exact IRRs on the instruments because the amortizations use the “effective interest method” for calculating monthly fee contributions.

Finally, it would be necessary to implement a more structured deployment approach where errors were logged and managed, the execution could be continuously monitored, individual member banks could be reprocessed, etc.

System Requirements and Description

From an overall perspective, it was necessary to develop a system able to perform the following tasks:

- Read and parse text files containing financial instrument data, exchange rates, etc. from the host system to individual objects, self-contained objects and/or DBMS records

- Generate cashflows for more than 400,000 financial instruments from input parameters

- Calculate IRRs on all cashflows

- Amortize loan fees and calculate monthly fee contributions using the “effective interest method”

- Store and maintain loan fee amortizations, balances, etc. for all required customer accounts

- Generate output files with accounting transactions and forward to host system

- Group and populate loan payments in predefined time buckets for subsequent manual loan depreciation execution

- Generate and populate more than 50,000 Excel spreadsheets

- Process calculations for more than 100 banks in a robust and stable system environment

- Generate extensive status and error reports for each bank together with a consolidated report for all banks involved

For strategic reasons, the DPC wanted the system to be developed in a .NET based Microsoft architecture while employing the already paid for Oracle license.

Data Model and Database

To support the requirements it would be necessary to develop a comprehensive data model – and subsequent database – containing a number of tables for storing data on instruments, payments, counterparts and execution, as well as log files, error messages, etc. The data model and the ensuing database was developed using the ER/Studio tool from Embarcadero.

Database Access and Performance

Because of the relatively time consuming calculations – and the storage of instrument data with a high level of detail – database performance would be critical. Consequently, optimal database access would be a risk and focus area, which is why .NET’s Oracle driver was discarded in favor of Oracle’s ODP driver that had significantly higher general performance and support for array inserts. Array inserts allow for e.g. the insertion of payments for 50 loans at a time – in a single database network trip – as opposed to inserting 500 – 1000 individual payments each requiring a database network trip. The use of DBMS array processing often entails a dramatic performance improvement (typically by an order of magnitude), but requires more extensive system development (e.g. for error processing).

Data Load

To process loan input data from the host system, it was necessary to develop a general parser/generator which was able to generate instrument classes – with associated database contents – from text parameters.

Loan Calculations

As for the actual loan calculations, a large number of modules and classes were developed – e.g. for counting the number of days in time intervals using a specific date count convention, enumeration of business days, calculating IRR values, generating cashflows for fee amortization, etc.

Spreadsheet Generation

To generate the Excel based depreciation spreadsheets, the GemBox.Spreadsheet .NET component was used. Bypassing the regular Excel OLE automation interface (as used in the original supplier solution), the Gembox component was an order of magnitude quicker than the old system.

Status and Error reporting

When the entire execution had completed, the system was able to forward Excel based status reports (containing number and types of loans processed, total amortization contribution, error and warning reports, etc.) to each participating member bank.

System Architecture

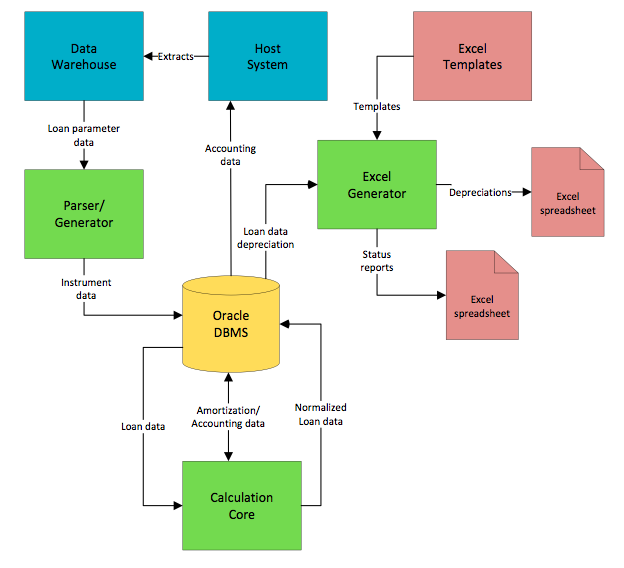

Graphically, the system may be illustrated as in the following figure:

Execution Modes

The application managing the overall execution was able to run in two modes: a GUI mode where individual member banks could be selected and processed, and the current processing could be monitored, and in batch mode allowing for automatic process initiation and execution from the host scheduling system.

Development Resource Requirements

The GUI was developed using Developer Express .NET components.

Two people developed the entire system – in C #/.NET using MS Visual Studio – in 6 calendar months.

Added Value

By abandoning and scrapping an already planned – and partly implemented – system, in favor of developing a new bespoke system from the ground up, the DPC was able to obtain a number of advantages:

- Enabling execution within available timeframes

- Execution without system crashes and data modifications

- Execution using only a single calculation server as opposed to previously five servers entailing considerable hardware/software savings

- Substantially more accurate and correct calculation data

- Significant savings on deployed software licenses and annual maintenance costs

- Generation of detailed error and log messages in structured formats

- User friendly deployment of complicated calculations and report generation

Venturing into a development project like the one just described of course was not a risk-free undertaking for the DPC, but from an overall cost-benefit perspective there is no doubt that it was a very lucrative investment.

Michael has more than 25 years of experience as a business and IT consultant, with primary focus on financial institutions.

From an early start, Michael has been involved in IT development and gradually moved into more technical IT implementation. He has extensive experience with IT, and has worked with a number of different platforms and database systems.

Michael is part of CMP’s team of strong technical IT consultants who provide our customers with key technical expertise across a wide range of technical challenges.

Read more about Michael and our other CMP consultants here.